[This blog post is a more nuanced version of my HBR article titled Curiosity Driven Data Science.]

The real value of data science lies not in making existing processes incrementally more efficient but in creating new algorithmic capabilities that enable step-function changes in value. However, such capabilities are rarely asked for in a top-down fashion. Instead, they are discovered and revealed through curiosity-driven tinkering by data scientists. For companies ready to jump on the data science bandwagon, I offer this advice: think less about how data science will support and execute your plans, and think more about how to create an environment that empowers your data scientists to come up with ideas you’ve never dreamed of.

At Stitch Fix, we have more than 100 data scientists who have created several dozen algorithmic capabilities, generating hundreds of millions of dollars in benefits. We have algorithms for recommender systems, merchandise buying, inventory management, client relationship management, logistics, and operations; we even have algorithms for designing clothes! Each provides material and measurable returns, enabling us to better serve our clients while providing a protective barrier against competition. Yet virtually none of these capabilities were asked for: not by executives, product managers, or domain experts, and not even by a data science manager. Instead, they were born out of curiosity and extracurricular tinkering by data scientists.

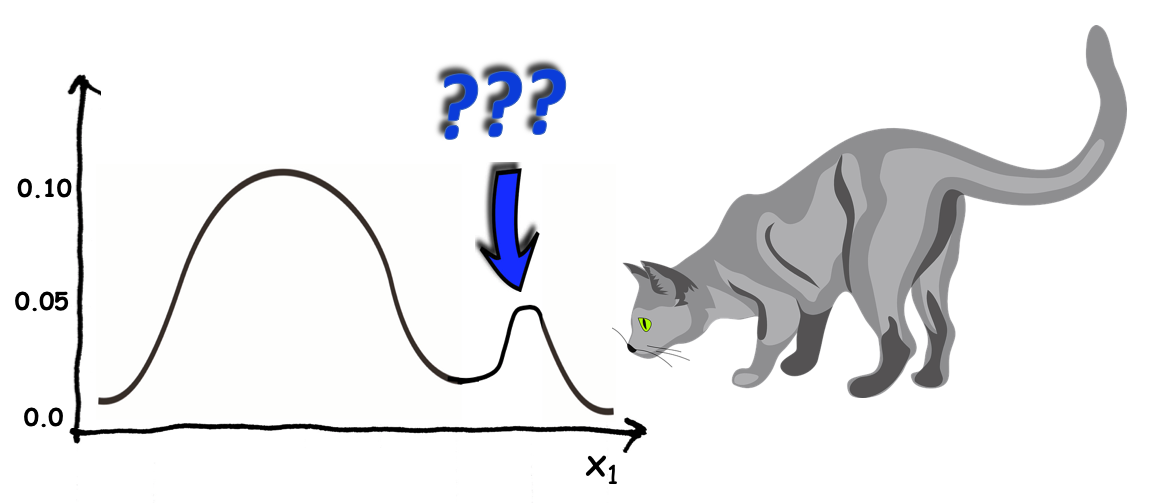

Data scientists are a curious bunch, especially the talented ones. They work towards stated goals, and they are focused on and accountable for achieving certain performance metrics. But they are also easily distracted, in a good way. In the course of their work, they stumble on patterns, phenomena, and anomalies unearthed during their data sleuthing. This goads the data scientist’s curiosity: “Is there a latent dimension that can characterize a client’s style?” “If we modeled clothing fit as a distance measure, could we improve client feedback?” “Can successful features from existing styles be systematically recombined to create better ones?” Such questions can be insatiable, and the data scientist knows the answers lie hidden in reams of historical data. Tinkering ensues. They don’t ask permission (it’s easier to ask forgiveness than permission). In some cases, explanations can be found quickly, in only a few hours or so. Other times, it takes longer because each answer evokes new questions and hypotheses, leading to more tinkering. But the work stays confined to unsanctioned side work, at least for now. They’ll tinker on their own time if they need to, evenings and weekends if they must. Yet no one asked them to; curiosity is a powerful force.

Are they wasting their time? No! Data science tinkering is typically accompanied by evidence of the exploration’s merits. Statistical measures like AUC, RMSE, and R-squared quantify how much predictive power the data scientist’s exploration adds. Data scientists also have the business context to assess the viability and potential impact of a solution that leverages their new insights. If there is no “there” there, they stop. But when compelling evidence is found and coupled with big potential, the data scientist is emboldened. The exploration flips from being curiosity-driven to impact-driven. “If we incorporate this latent style space into our styling algorithms, we can better recommend products.” “This fit feature will materially increase client satisfaction.” “These new designs will do very well with this client segment.” Note the difference in tone. Much of the uncertainty has been allayed and replaced with potential impact. No longer satisfied with mere historical data, the data scientist turns to more rigorous methods: randomized controlled trials, or “A/B tests,” which can measure true causal impact. She wants to see how her insights perform in real life. She cobbles together a new algorithm based on the newly revealed insights and exposes it to a sample of clients in an experiment. She’s already confident it will improve the client experience and business metrics, but she needs to know by how much. If the experiment yields a big enough win, she’ll roll it out to all clients. In some cases, building a robust capability around her new insights may require additional work. This will almost surely go beyond what can be considered “side work,” and she’ll need to collaborate with others on engineering and process changes. But she will have already validated her hypothesis and quantified the impact, giving her a clear case for prioritizing it within the business.
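
To make “by how much” a little more concrete, here is a minimal sketch of how a control-versus-treatment comparison might be quantified. It is not Stitch Fix’s tooling; it assumes a binary success metric (for example, whether a client keeps an item) and uses a standard two-proportion z-test with a normal approximation. The function name `ab_test_lift` and the counts in the usage line are hypothetical.

```python
import math


def ab_test_lift(successes_a, n_a, successes_b, n_b):
    """Hypothetical helper: compare control (A) vs. treatment (B) success rates.

    Uses a two-proportion z-test (normal approximation).
    Returns (absolute lift, relative lift, z statistic, two-sided p-value).
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled success rate under the null hypothesis that A and B do not differ
    p_pool = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value for a standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, (p_b - p_a) / p_a, z, p_value


# Hypothetical counts: 10% success in control, 11% in treatment, 10,000 clients each
abs_lift, rel_lift, z, p = ab_test_lift(1000, 10_000, 1100, 10_000)
print(f"absolute lift={abs_lift:.3f}, relative lift={rel_lift:.1%}, z={z:.2f}, p={p:.3f}")
```

With these made-up numbers the treatment shows about a one-point absolute (roughly 10% relative) lift with z around 2.3. The point of the sketch is that the experiment reports an effect size alongside its uncertainty, which is what makes the case for prioritization concrete.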

The essential thing to note here is that no one asked the data scientists to explore. Managers, PMs, domain experts: none of them saw the unexplained phenomenon that the data scientist stumbled upon. This is what tipped her off to start tinkering. And the data scientist didn’t have to ask permission to explore, because exploration is cheap enough that it happens fluidly in the course of the work, or she is compelled by curiosity to flesh it out on her own time. In fact, if she had asked permission to explore her initial itch, managers and stakeholders probably would have said “no.” The insights and resulting capabilities are often so unintuitive and/or esoteric that, without evidence to support them, they don’t seem like a good use of time or resources.

These two things, low-cost exploration and empirical evidence, set data science apart from other business functions. Sure, other departments are curious too: “I wonder if clients would respond better to this type of creative?” a marketer might ponder. “Would a new user interface be more intuitive?” a product manager might ask. But those questions can’t be answered with historical data. Exploring those ideas requires actually building something, which is costly. And justifying the cost is often difficult, since there’s no evidence suggesting the ideas will work. But with data science’s low-cost exploration and risk-reducing evidence, more ideas are explored, which in turn leads to more innovation.

Sounds great, right? It is! But this doesn’t happen by will alone. You can’t just declare as an organization that “we’ll do this too.” This is a very different way of doing things. Many established organizations are set up to resist change. Such a new approach can create so much friction with the existing processes that the organization rejects it in the same way antibodies attack a foreign substance entering the body. It’s going to require fundamental changes to the organization that extend beyond the addition of a data science team. You need to create an environment in which it can thrive.

First, you have to position data science as its own entity. Don’t bury it under another department like marketing, product, or engineering. Instead, make it its own department, reporting to the CEO. In some cases, the data science team can be completely autonomous in producing value for the company. In other cases, it will need to collaborate with other departments to provide solutions. Yet it will do so as an equal partner, not as a support staff that merely executes what it is asked to do. Recall that most algorithmic capabilities won’t be asked for; they are discovered through exploration. So, instead of positioning data science as a supportive team in service to other departments, make it responsible for business goals. Then hold it accountable for hitting those goals, but let the data scientists come up with the solutions.

Next, you need to equip the data scientists with all the technical resources they need to be autonomous. They’ll need full access to data as well as the compute resources to process their explorations. Requiring them to ask permission or request resources imposes a cost, so less exploration will occur. My recommendation is to leverage a cloud architecture where compute resources are elastic and nearly infinite.

The data scientists will also need the skills to provision their own compute and conduct their own exploration. They will have to be great generalists. Most companies divide their data scientists into teams of functional specialists, say, Modelers, Machine Learning Engineers, Data Engineers, Causal Inference Analysts, etc. While this may provide greater focus, it also requires coordination among many specialists to pursue any exploration. This increases costs, so fewer explorations are conducted. Instead, leverage “full-stack data scientists” who have the varied skills to perform all of these specialty functions. Of course, data scientists can’t be experts in everything. Providing a robust data platform can shield them from the intricacies of distributed processing, auto-scaling, graceful degradation, etc. This way, the data scientist focuses more on driving business value through testing and learning, and less on technical specialties. The cost of exploration is lowered, so more things are tried, leading to more innovation.

Finally, you need a culture that will support a steady process of learning and experimentation. This means the entire company must have common values for things like learning by doing, being comfortable with ambiguity, and balancing long- and short-term returns. These values need to be shared across the entire organization as they cannot survive in isolation.

Before you jump in and implement this at your company, be aware that it will be hard, if not impossible, to implement at an older, more established company. I’m not sure it could have worked, even at Stitch Fix, if we hadn’t enabled data science to be successful from the very beginning. Data science was not “inserted” into the organization. Rather, data science was native to us even in our formative years, and hence the necessary ways of working come more naturally.

This is not to say data science is necessarily destined for failure at older, more mature companies, although it is certainly harder than starting from scratch. Some companies have been able to pull off miraculous changes. It’s too important not to try. The benefits of this model are substantial, and for companies that have the data assets to create a sustaining competitive advantage through algorithmic capabilities, it’s worth considering whether this approach can work for you.

Postscript

People often ask me, “Why not provide the time for data scientists to be creative like Google’s 20 percent time?” We’ve considered this several times after seeing many successful innovations emerge from data science tinkering. In spirit, it’s a great idea. Yet, we have concerns that a structured program for innovation may have unintended consequences.

Such programs may be too open-ended and lead to research where there is no actual problem to solve. The real examples I depicted above all stemmed from observation: patterns, anomalies, an unexplained phenomenon, etc. They were observed first and then researched. Research that starts without an observed problem is less likely to lead to impact.

Structured programs may also set expectations too high. I suspect there would be a tendency to think of the creative time as a PhD dissertation, requiring novelty and a material contribution to the community (e.g., “I’d better consult with my manager on what to spend my 20 percent time on”). I’d prefer a more organic process that is driven from observation. The data scientists should feel no shame in switching topics often or modifying their hypotheses. They may even find that their stated priorities are the most important thing they can be doing.

So, instead of a structured program, let curiosity drive. When data scientists own business goals and have generalized roles, tinkering and exploration become a natural and fluid part of the job. In fact, it’s hard to quell curiosity. Even if one were to explicitly ban curiosity projects, data scientists would simply conduct their explorations surreptitiously. Itches must be scratched!